LLM Benchmarks Metrics for Dummies

What It Means for a Product Guy

Disclaimer: I am by no means an expert in any subject matter, nor will I ever claim to be. What you are reading now is an unfiltered version of the topic from my perspective.

Let’s Start with Basics.

What is A Dataset?

(Trust me. Important to know.)

For our purpose let’s stick to 2 types of them:

Type 1 - General Datasets:

It Can be anything Images, Text, Documents, Videos, etc.

We have not defined anything about what we want out of it.

Type 2 - Supervised Datasets:

This dataset exists in pairs. Like: Document and its summary. Video and its caption. Questions and their Answers, etc.

There is an output tied to an input.

Why does it matter? More importantly, Why should we know about this?

Type 1 - General Datasets are good for exploration and summary.

Meanwhile,

Type 2 - Supervised Datasets are gold for machine learning. You can evaluate since there is an output for every input.

In our case, LLMs are Large Language Models and they mostly revolve around text.

Another Basic Topic before we begin. Just carry on, please.

What is a Metric?

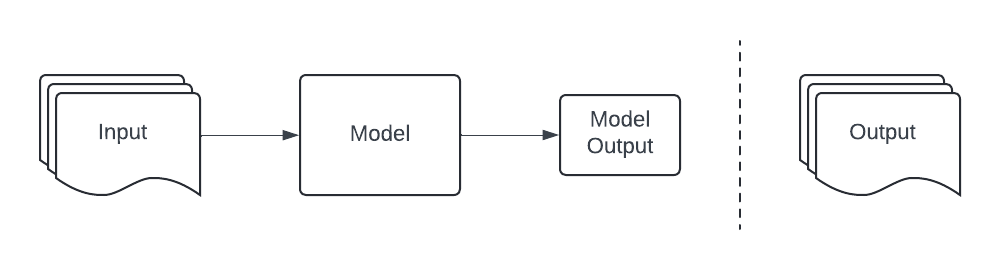

What are we trying to measure anyway?

We need to define it. Before we prepare “Artificial Intelligence.” *evil laugh*

We will measure the model output on our standard Output. In the case of LLMs it will be a “text” output.

Now you can let your imagination run wild and start thinking:

How would we evaluate something that spits out text garbage?

It would have been easy if it was just a number or black vs white, isn’t it?

What about a bunch of text?

Can we ask a bunch of humans to evaluate it?

Isn’t it subjective?

Where is the standard in it?

Now you see where we are getting at. This is why there are benchmarks prepared to evaluate “text” output.

Let’s Jump into Benchmarks now:

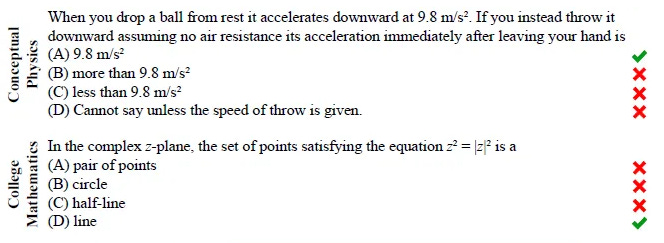

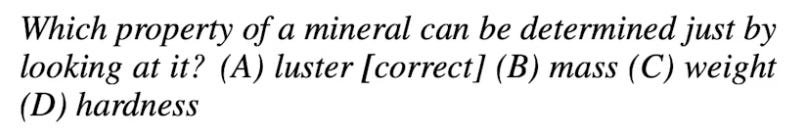

Benchmark 1: MMLU (Massive multi Task Understanding)

This benchmark is mostly for factual knowledge.

A dataset with 15908 questions that range from Humanity to Science and Technology.

The expert-level accuracy is estimated at approximately 89.8%.

Have a look at questions:

Why should it matter to the product guy?

A high score on MMLU means the LLM behind your product has a strong foundation of factual knowledge and can apply that knowledge to new situations.

This can help you make a more informative and helpful product for your users.

What is the problem with this evaluation?

(Yes we have to think about that as product people)

This is just a factual checker.

Where is the creativity?

Where are the sassy capabilities like Elon Musk?

Benchmark 2: ARC (AI2 Reasoning Challenge)

This dataset was created to measure QA capacities like reasoning and commonsense knowledge.

The Challenge Set contains 2590 questions.

It has two sets “Easy” and “Hard”.

Have a look at a question:

Why should it matter to the product guy?

ARC helps identify LLMs that can think critically and solve problems, making a more intelligent and helpful product for your users.

For example, if your product needs to understand user intent or complete complex tasks, a high score on ARC is a good sign.

What is the problem with this evaluation?

Good for reasoning but you get it, it is still factual and limited to reasoning.

BTW, Where is my creativity?

I want you to talk like Elon Musk right now. Just do it.

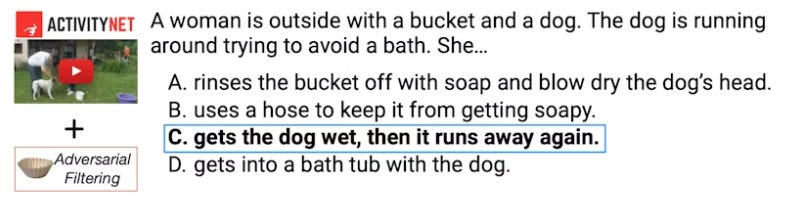

Benchmark 3: HELLASWAG (Harder Endings, Longer contexts, and Low-shot Activities for Situations With Adversarial Generations)

What-a-name. IKR.

This dataset has twists or tricky endings as you can see from the question:

Why should it matter to the product guy?

A high score on HELLASWAG means that the LLM can handle tricky situations with better reasoning.

What is the problem with this evaluation?

There might be specific edge cases relevant to your product that HELLASWAG doesn’t cover.

Imagine a medical chatbot.

Do you think it can handle emotionally charged situations?

BTW, I am still waiting for Elon Musk’s imitation. Creativity, where are you at?

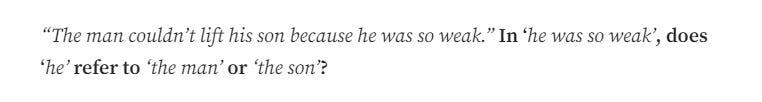

Benchmark 4: Winograd

Another tricky common-sense dataset. Just 2 options to answer from.

It uses sentences where the meaning depends on understanding the physical world, not just the words themselves.

Have a look:

Why should it matter to the product guy?

A high score on Winograd Schema Challenge = better real world understanding

Imagine a virtual assistant booking a flight. An LLM can understand the real-world context of "the flight" (e.g., taking off, traveling through air) and avoid booking a hotel room instead based on a misinterpreted word.

What is the problem with this evaluation?

A high Winograd score might not guarantee the LLM can understand sarcasm.

Also, the chances of getting an answer right are simply 50/50.

My Elon Musk obsession still continues.

Benchmark 5: TruthfulQA

Since models are trained on Internet data, this benchmark is to just check model is not generating some conspiracy theory.

Factual dataset again. The questions are a little different, have a look:

The answers are from GPT-3. They are FALSE.

Why should it matter to the product guy?

Trust matters.

Imagine a health and wellness app powered by an LLM.

Now imagine the LLM giving you herbal solutions for cancer. (Psst, We don’t want that.)

What is the problem with this evaluation?

There's a Catch (and it's not a Tesla):

TruthfulQA is a new benchmark, and LLMs are still under development when it comes to truthfulness. While a high score is positive, it doesn't mean an immunity from false information.

A truthful question totally unrelated to Elon Musk:

Which LLM can shout “stonks” max number of times?

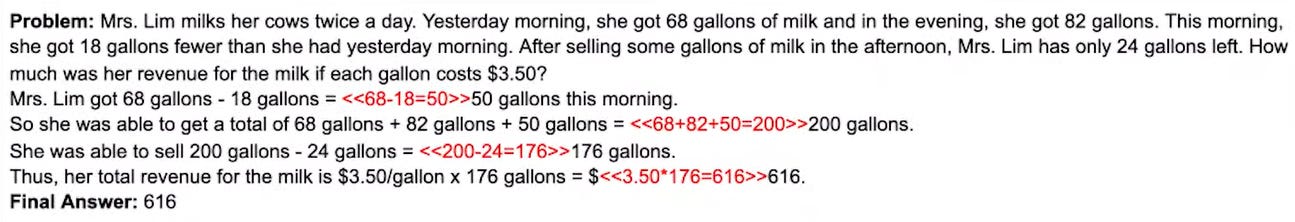

Benchmark 6: GSM8K(Grade School Math 8K)

Just a bunch of Math problems.

I need you to know that LLMs are not good with maths, they are LLMs, not calculators.

They create, thinking is not their forte.

Here is a sample question from this:

Why should it matter to the product guy?

Do you want to sell a product at 10% of the original price because of a miscalculation?

I hope not. Then watch out for this benchmark.

What is the problem with this evaluation?

As you can see there are steps involved in answering the question. LLM models are nasty for memorizing answers.

They answer right but still get the steps wrong.

Now, Now, A low score doesn't necessarily mean the LLM is useless for your product. Just don’t ask it to take orders please.

Let’s take LLMs to the ‘moon’.

Benchmark 7: MT-Bench/Chatbot Arena

This benchmark is about replacing humans. *Only partially*

Correction: This benchmark is about chatbots. and it asks 10 multi-turn questions. It helps in measuring the flow of conversation and judgment capability.

Have a look:

Why should it matter to the product guy?

If your product is a chatbot, follow this benchmark.

A natural conversation flow means a user-friendly experience.

What is the problem with this evaluation?

We all know 1 customer who only talks in a language that your customer representative can understand. IYKYK.

Slang and complicated human conversations can still derail the LLM.

I am sorry for not mentioning “Cybertruck” and “Neuralink”.

Please do not trust any organization when they share the benchmarks publicly, let someone else evaluate it before you blindly trust them.

Here is one of the spaces you can know about models:

https://huggingface.co/collections/open-llm-leaderboard/the-big-benchmarks-collection-64faca6335a7fc7d4ffe974a

You can Read more here:

https://sh-tsang.medium.com/brief-review-mmlu-measuring-massive-multitask-language-understanding-7b18e7cbbeab

https://deepgram.com/learn/arc-llm-benchmark-guide

https://deepgram.com/learn/hellaswag-llm-benchmark-guide

https://medium.com/@mrkellyjam/can-chatgpt-solve-the-winograd-schema-challenge-605bb6e3af79

Follow me on LinkedIn: LinkedIn